Web Scraping SERP pages from Google.com

Try our Google Scraper Tool Today! So, what is the easiest way to scrape Google search result pages (SERP) ?

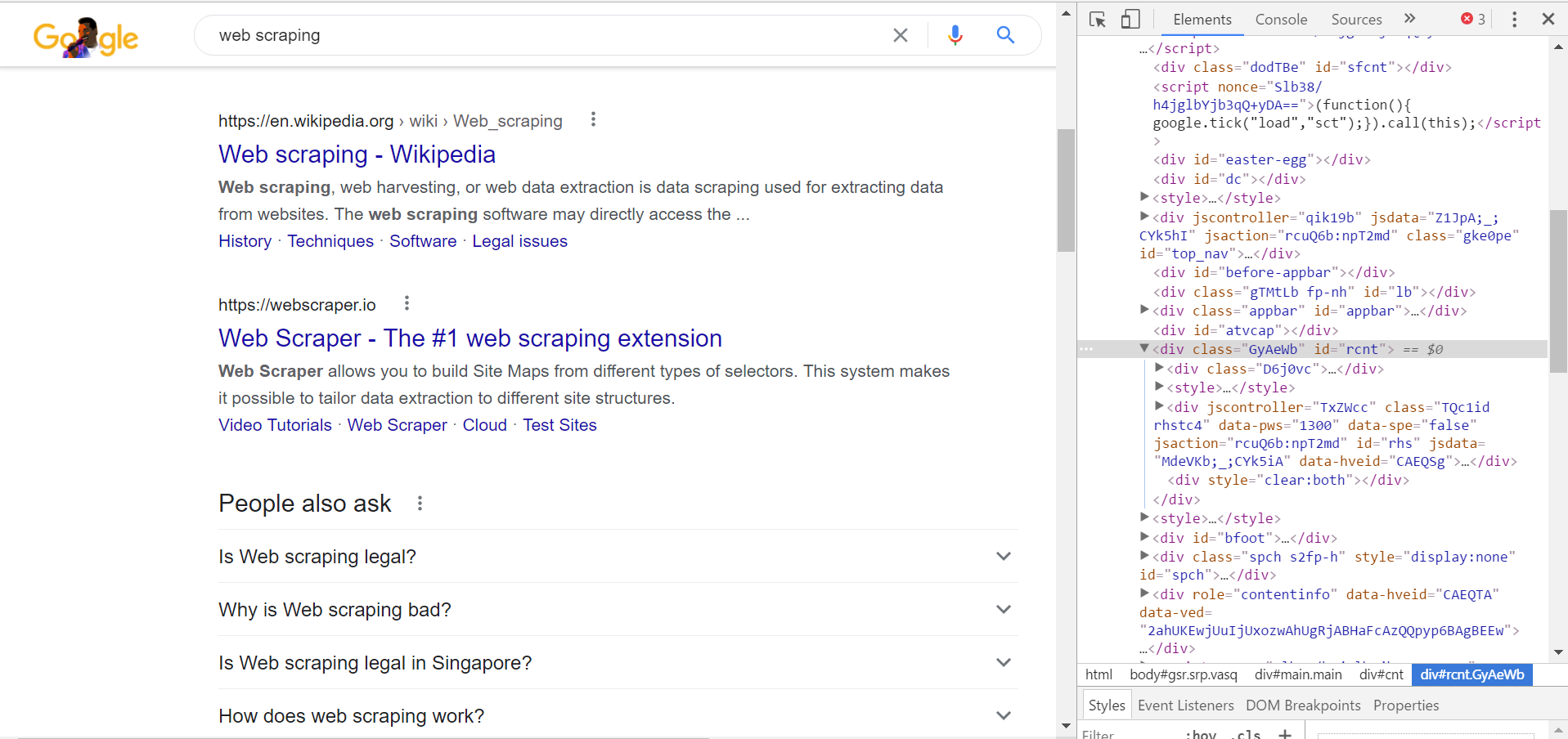

Figure 1: Inspecting the source of HTML source code for Google search result pages (SERP) webpage.

Option 1: Subscribe to Specrom’s SERP Scraper Tool

We have an Google SERP Scraper Tool that will extract all the relevant information such as SERP position, title, url, snippet, Domain authority, and Alexa ranking by simply specifying a keyword.

The free starter plan is just $1 for 7 days, and business and enterprise plans are only $39/year and $99/year.

This option can allow you to fetch results for hundreds of keywords as CSV file.

This tool is perfect since it handles solving CAPTCHA or rotating proxy IP addresses so you get the data you need without any additional work.

Option 2: Scrape Google.com on your own

Python is great for web scraping and we will be using a library called Selenium to extract results for the keywords “web scraping”.

Fetching raw html page from the Google.com

We will automate entering of search query into the textbox and clicking enter using Selenium

### Using Selenium to extract Google.com's raw html source

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import Select

import time

from bs4 import BeautifulSoup

import numpy as np

import pandas as pd

test_url = 'https://www.google.com'

option = webdriver.ChromeOptions()

option.add_argument("--incognito")

chromedriver = r'chromedriver.exe'

browser = webdriver.Chrome(chromedriver, options=option)

browser.get(test_url)

text_area = browser.find_element_by_id('gLFyf gsfi')

text_area.send_keys("Web scraping")

element = browser.find_element_by_xpath('//*[@id="tsf"]/div[1]/div[1]/div[2]/button/div/span')

element.click()

html_source = browser.page_source

browser.close()

Using BeautifulSoup to extract Google SERP data

Once we have the raw html source, we should use a Python library called BeautifulSoup for parsing the raw html files.

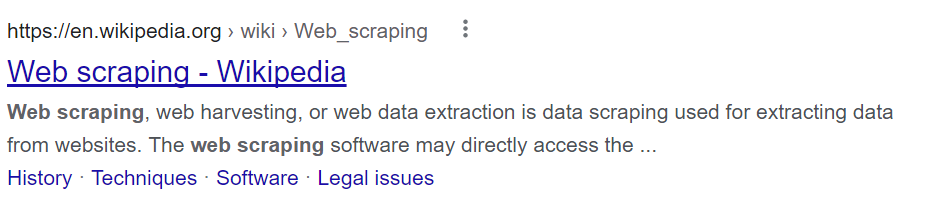

As a reference, refer to the figure below.

Figure 2: individual search results from Google SERP page.

Extracting titles for each SERP result

From inspecting the html source, we see that titles have h3 tags and belong to class ‘LC20lb DKV0Md’.

# extracting titles

soup=BeautifulSoup(html_source, "html.parser")

serp_title_src = soup.find_all('h3', {'class','LC20lb DKV0Md'})

serp_title_list = []

for val in serp_title_src:

try:

serp_title_list.append(val.get_text())

except:

pass

serp_title_list

#Output

['Web scraping - Wikipedia',

'Web Scraper - The #1 web scraping extension',

'Beautiful Soup: Build a Web Scraper With Python – Real Python',

'What is Web Scraping and What is it Used For? | ParseHub',

'ParseHub | Free web scraping - The most powerful web scraper',

'Tutorial: Web Scraping with Python Using Beautiful Soup – Dataquest',

'Web Scraper - Free Web Scraping',

'Web Scraping Explained - WebHarvy',

'Web Scraping with Python - Beautiful Soup Crash Course - YouTube',

'What Is Web Scraping and 5 Findings about Web Scraping | Octoparse',

'What Is Web Scraping And How Does Web Crawling Work?',

'Data Scraping | Web Scraping | Screen Scraping | Extract - Import.io',

'Web Scraping Made Easy: The Full Tutorial - OutSystems',

'Web scraping with JS | Analog Forest',

'Web Scraping, Data Extraction and Automation · Apify',

'Web Scraping with Python: Everything you need to know (2021)',

'What is Web Scraping and How to Use It? - GeeksforGeeks',

'Web Scraping: The Comprehensive Guide for 2020 – ProWebScraper',

'Introduction to web scraping - Library Carpentry']

Extracting URLs

The next step is extracting URLs of individual SERP results. We see that it is div tag of class ‘yuRUbf’.

# extracting URLs from Google SERP results

URLs_src = soup.find_all('div',{'class', 'yuRUbf'})

url_list = []

for val in URLs_src:

url_list.append(val.find('a')['href'])

url_list

# Output

['https://en.wikipedia.org/wiki/Web_scraping',

'https://webscraper.io/',

'https://realpython.com/beautiful-soup-web-scraper-python/',

'https://www.parsehub.com/blog/what-is-web-scraping/',

'https://www.parsehub.com/',

'https://www.dataquest.io/blog/web-scraping-python-using-beautiful-soup/',

'https://chrome.google.com/webstore/detail/web-scraper-free-web-scra/jnhgnonknehpejjnehehllkliplmbmhn%3Fhl%3Den',

'https://www.webharvy.com/articles/what-is-web-scraping.html',

'https://www.youtube.com/watch%3Fv%3DXVv6mJpFOb0',

'https://www.octoparse.com/blog/what-is-web-scraping',

'https://www.zyte.com/learn/what-is-web-scraping/',

'https://www.import.io/product/extract/',

'https://www.outsystems.com/blog/posts/web-scraping-tutorial/',

'https://qoob.cc/web-scraping/',

'https://apify.com/',

'https://www.scrapingbee.com/blog/web-scraping-101-with-python/',

'https://www.geeksforgeeks.org/what-is-web-scraping-and-how-to-use-it/',

'https://prowebscraper.com/blog/what-is-web-scraping/',

'https://librarycarpentry.org/lc-webscraping/']

Extracting snippets

Snippets are couple of sentences of text that briefly explain the content in each of the result webpages.

Along with title and URL, snippets are one of the most important pieces of information to extract from each Google SERP page.

- We will extract snippet for each job postings. For brevity we will only show results from first three results, and you can verify that the first result matches the text in figure 2 above.

# extracting snippets from each SERP listing

snippet_src = soup.find_all('span', {'class', 'aCOpRe'})

snippet_list = []

for val in snippet_src:

snippet_list.append(val.get_text())

snippet_list[:3]

# Output

['Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. The web scraping software may directly access the \xa0...\nHistory · Techniques · Software · Legal issues',

'Web Scraper allows you to build Site Maps from different types of selectors. This system makes it possible to tailor data extraction to different site structures.\nVideo Tutorials · Web Scraper · Cloud · Test Sites',

'What Is Web Scraping? Web scraping is the process of gathering information from the Internet. Even copy-pasting the lyrics of your favorite song is a form of web\xa0...']

Converting into CSV file

You can take the lists above, and read it as a pandas DataFrame. Once you have the Dataframe, you can convert to CSV, Excel or JSON easily without any issues.

Scaling up to a full crawler for extracting Google results in bulk

Once you scale up to make thousands of requests to fetch all the pages, the Google.com servers will start blocking your IP address outright or you will be flagged and will start getting CAPTCHA.

To make it more likely to successfully fetch data for all USA, you will have to implement:

- rotating proxy IP addresses preferably using residential proxies.

- rotate user agents

- Use an external CAPTCHA solving service like 2captcha or anticaptcha.com

After you follow all the steps above, you will realize that our pricing for managed web scraping or our Google scraper API is one of the most competitive in the market.