Web scraping Marshalls store location data

So, what is the easiest way to get a CSV file of all the Marshalls store locations in the USA?

Buy the Marshalls store data from our data store

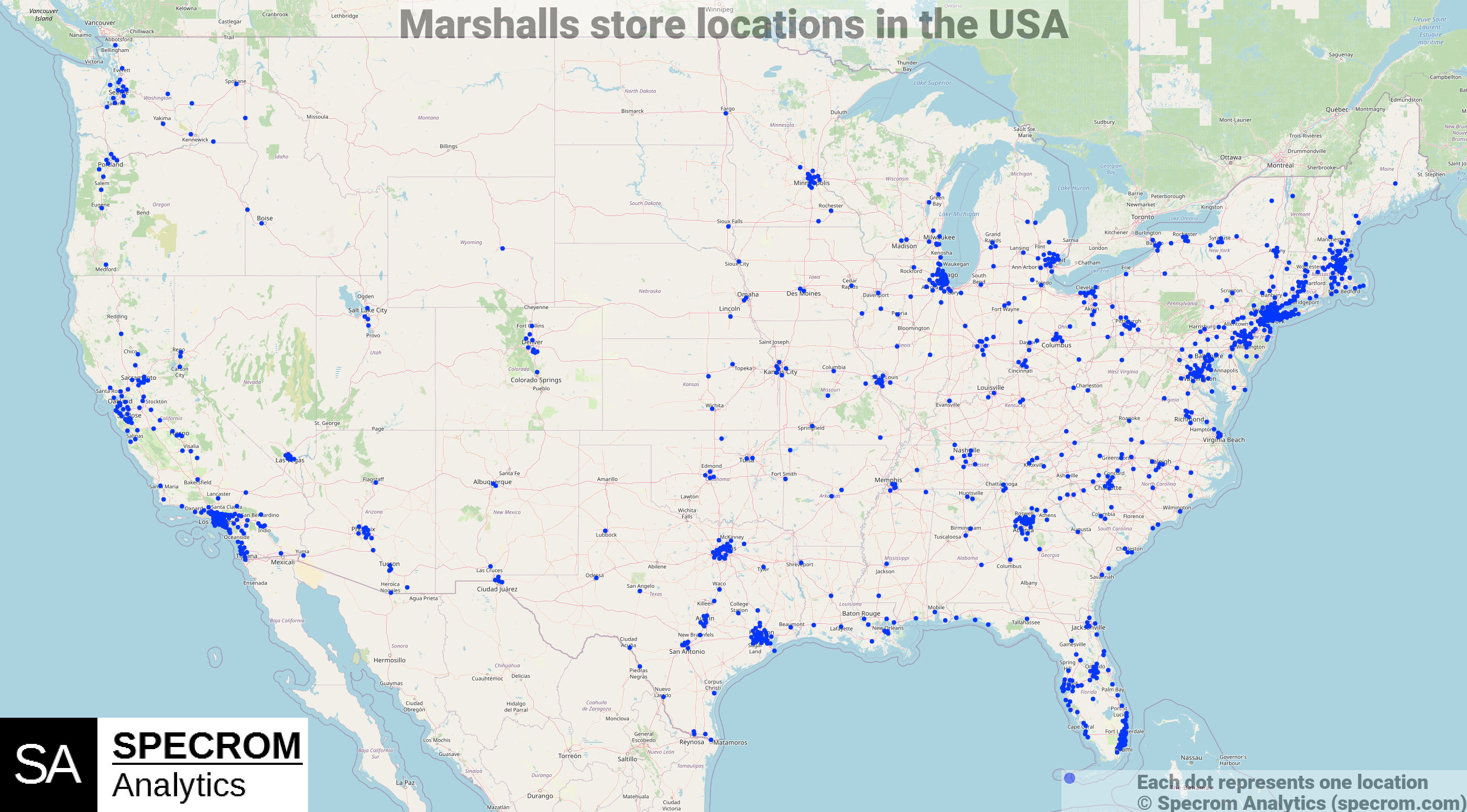

There are over 1,000 Marshalls stores in the USA, and you can buy the CSV file containing the address, city, zip, latitude, and longitude of each location in our data store for $50.

Figure 1: Marshalls store locations. Source: Marshalls Store Locations dataset

If you are instead interested in scraping the locations on your own, then continue reading the rest of the article.

Scraping the Marshalls store locator using Python

We will keep things simple for now and scrape Marshalls store locations for only one zip code.

Python is great for web scraping, and we will use a library called Selenium to extract the raw HTML source of the Marshalls store locator for zip code 30301 (Atlanta, GA).

- Fetch the raw HTML page from the Marshalls store locator for individual zip codes or cities in the USA.

### Using Selenium to extract Marshalls store locator's raw html source

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
import time
from bs4 import BeautifulSoup

query_url = 'https://www.marshalls.com/us/store/stores/storeLocator.jsp'

option = webdriver.ChromeOptions()
option.add_argument("--incognito")

# path to your local chromedriver executable
chromedriver = r'chromedriver_path'
# Selenium 4 receives the driver path through a Service object
browser = webdriver.Chrome(service=Service(chromedriver), options=option)
browser.get(query_url)

# type the zip code into the search box
text_area = browser.find_element(By.ID, 'store-location-zip')
text_area.send_keys("30301")

# click the input that submits the store search form
element = browser.find_element(By.XPATH, '//*[@id="findStoresForm"]/div[3]/input[9]')
element.click()

# give the results time to load before reading the page source
time.sleep(5)
html_source = browser.page_source

# close the browser and end the chromedriver session
browser.quit()

Using BeautifulSoup to extract Marshalls store details

Once we have the raw HTML source, we can parse it with a Python library called BeautifulSoup.

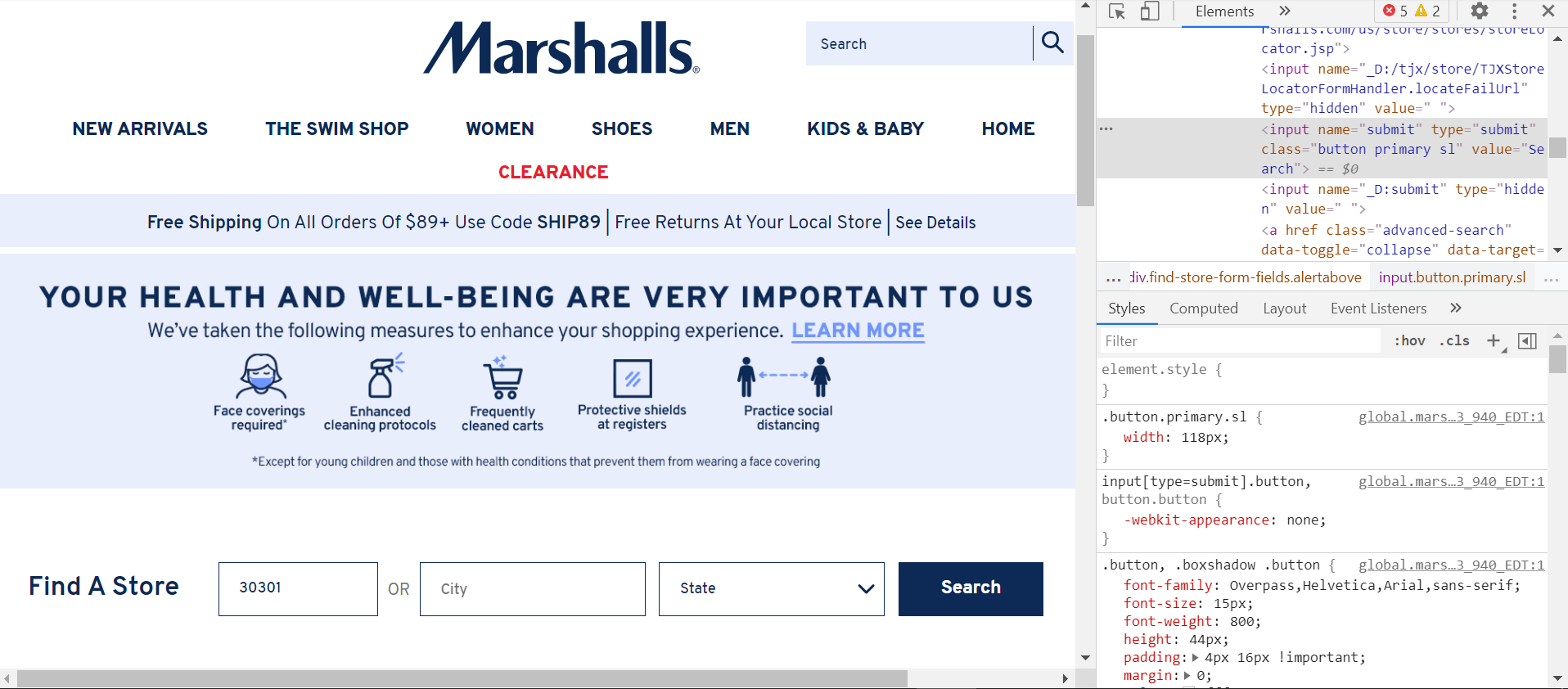

- Open the page in the Chrome browser and click Inspect to find the tags and class names that hold each store's details.

Figure 2: Inspecting the source of Marshalls store locator.

Extracting store names

As a first step, we will extract each store's name.

# extracting Marshalls store names
# parse the raw HTML captured by Selenium
soup = BeautifulSoup(html_source, 'html.parser')
# match h3 tags on their class attribute
store_name_list_src = soup.find_all('h3', {'class': 'address-heading fn org'})
store_name_list = []
for val in store_name_list_src:
    store_name_list.append(val.get_text())
store_name_list[:10]

#Output
['East Point',
'College Park',
'Fayetteville',
'Atlanta',
'Decatur',
'Atlanta',
'Atlanta (Brookhaven)',
'McDonough',
'Smyrna',
'Lithonia']

Extracting Marshalls store addresses

Use Inspect in the Chrome browser to find the tag and class name for each address.

# extracting Marshalls addresses
# each address sits in a div with class "adr"
addresses_src = soup.find_all('div', {'class': 'adr'})
address_list = []
for val in addresses_src:
    address_list.append(val.get_text())
address_list[:10]

# Output
['\n3606 Market Place Blvd.\nEast Point,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30344\n',
'\n 6385 Old National Hwy \nCollege Park,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30349\n',
'\n109 Pavilion Parkway\nFayetteville,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30214\n',
'\n2625 Piedmont Road NE\nAtlanta,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30324\n',
'\n2050 Lawrenceville Hwy\nDecatur,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30033\n',
'\n3232 Peachtree St. N.E. \nAtlanta,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30305\n',
'\n150 Brookhaven Ave\nAtlanta (Brookhaven),\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30319\n',
'\n1930 Jonesboro Road\nMcDonough,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30253\n',
'\n2540 Hargroves Rd.\nSmyrna,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30080\n',
'\n8080 Mall Parkway \nLithonia,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30038\n']
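The raw address strings still carry the newlines and tabs from the page markup. A minimal sketch of one way to split them into separate fields is shown here. The helper name `parse_address` is our own, and it assumes every address follows the layout seen in the output above (street line or lines, then city with a trailing comma, then state, then zip); addresses that deviate from that layout would need extra handling.

```python
def parse_address(raw):
    """Split one raw address string into street, city, state, and zip."""
    # Break on newlines, trim tabs and spaces, and drop the empty pieces.
    parts = [piece.strip() for piece in raw.split('\n') if piece.strip()]
    if len(parts) < 4:
        raise ValueError(f'unexpected address layout: {raw!r}')
    # Assumed layout: one or more street lines, then "City,", state, zip.
    *street_lines, city, state, zip_code = parts
    return {
        'street': ' '.join(street_lines),
        'city': city.rstrip(','),
        'state': state,
        'zip': zip_code,
    }


raw = '\n3606 Market Place Blvd.\nEast Point,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30344\n'
print(parse_address(raw))
# {'street': '3606 Market Place Blvd.', 'city': 'East Point', 'state': 'GA', 'zip': '30344'}
```

Applying it to the whole list is then a one-liner such as `[parse_address(a) for a in address_list]`.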

Extract phone numbers

Next, we will extract each store's phone number.

# extracting phone numbers
# each phone number sits in a div with class "tel"
phone_src = soup.find_all('div', {'class': 'tel'})
phone_list = []
for val in phone_src:
    phone_list.append(val.get_text())
phone_list[:10]

# Output
['404-344-6703',
'770-996-4028',
'770-719-4699',
'404-233-3848',
'404-636-5732',
'404-365-8155',
'404-848-9447',
'678-583-6122',
'770-436-6061',
'678 526-0662']

Extracting individual Marshalls store URL

Each local store location has its own URL where additional information is available.

# extracting store url for each store
store_list_src = soup.find_all('li', {'class': 'store-list-item vcard address'})
store_list = []
for val in store_list_src:
    try:
        # the first link inside each list item points to the store page
        store_list.append(val.find('a')['href'])
    except (TypeError, KeyError):
        # skip list items that have no link or no href attribute
        pass
store_list[:10]

#Output
['https://www.marshalls.com/us/store/stores/East+Point-GA-30344/463/aboutstore',
'https://www.marshalls.com/us/store/stores/College+Park-GA-30349/1207/aboutstore',
'https://www.marshalls.com/us/store/stores/Fayetteville-GA-30214/687/aboutstore',
'https://www.marshalls.com/us/store/stores/Atlanta-GA-30324/353/aboutstore',
'https://www.marshalls.com/us/store/stores/Decatur-GA-30033/1098/aboutstore',
'https://www.marshalls.com/us/store/stores/Atlanta-GA-30305/621/aboutstore',
'https://www.marshalls.com/us/store/stores/Atlanta+Brookhaven-GA-30319/49/aboutstore',
'https://www.marshalls.com/us/store/stores/McDonough-GA-30253/859/aboutstore',
'https://www.marshalls.com/us/store/stores/Smyrna-GA-30080/644/aboutstore',
'https://www.marshalls.com/us/store/stores/Lithonia-GA-30038/836/aboutstore']
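Since the goal is a CSV file, the four lists can be written out row by row. The sketch below uses Python's built-in `csv` module; the function name `stores_to_csv` and the column names are our own choices, and it assumes the lists are aligned so that position `i` in each list describes the same store. Because a store without a link is skipped during URL extraction, the lists can drift out of step, so the sketch checks the lengths first.

```python
import csv
import io


def stores_to_csv(names, addresses, phones, urls, out_file):
    """Write one CSV row per store from four parallel lists."""
    lengths = {len(names), len(addresses), len(phones), len(urls)}
    if len(lengths) != 1:
        raise ValueError('lists differ in length; some store is missing a field')
    writer = csv.writer(out_file)
    writer.writerow(['name', 'address', 'phone', 'url'])
    for name, address, phone, url in zip(names, addresses, phones, urls):
        # Collapse the newlines and tabs in the raw address into single spaces.
        writer.writerow([name.strip(), ' '.join(address.split()), phone.strip(), url])


# Demonstration with the first two stores from the outputs above.
buffer = io.StringIO()
stores_to_csv(
    ['East Point', 'College Park'],
    ['\n3606 Market Place Blvd.\nEast Point,\n\t\tGA\n30344\n',
     '\n 6385 Old National Hwy \nCollege Park,\n\t\tGA\n30349\n'],
    ['404-344-6703', '770-996-4028'],
    ['https://www.marshalls.com/us/store/stores/East+Point-GA-30344/463/aboutstore',
     'https://www.marshalls.com/us/store/stores/College+Park-GA-30349/1207/aboutstore'],
    buffer,
)
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with `open(path, 'w', newline='')` in place of the in-memory buffer.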

Geocoding

You will need the latitude and longitude of each store if you want to plot the stores on a map like Figure 1.

Latitudes and longitudes are also necessary to calculate distances between points, driving radii, and so on, all of which are important parts of location analysis.
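As an illustration of the distance calculation, here is a minimal sketch of the haversine formula, which gives the great-circle ("as the crow flies") distance between two coordinate pairs. It treats the Earth as a sphere with a mean radius of 6,371 km, so it is an approximation and it does not measure driving distance. The function name `haversine_km` is our own.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    # Haversine of the central angle between the two points.
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# One degree of longitude along the equator is roughly 111 km.
print(round(haversine_km(0.0, 0.0, 0.0, 1.0), 1))
```

With store coordinates in hand, the same function can be used to find all stores within a given radius of a point.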

We recommend that you use a robust geocoding service like Google Maps to convert each address into coordinates (latitude and longitude). It costs $5 per 1,000 addresses, but in our view it's totally worth it.

There are some free geocoding alternatives based on OpenStreetMap, but none that match the accuracy of Google Maps.
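For reference, a rough sketch of calling the Google Geocoding web service with only the Python standard library might look like the following. It assumes you have your own API key, and the helper names (`build_geocode_url`, `extract_lat_lng`, `geocode`) are ours. Treat it as a starting point and check Google's current documentation, quotas, and terms before relying on it.

```python
import json
import urllib.parse
import urllib.request

GEOCODE_ENDPOINT = 'https://maps.googleapis.com/maps/api/geocode/json'


def build_geocode_url(address, api_key):
    """Build the request URL for one address."""
    query = urllib.parse.urlencode({'address': address, 'key': api_key})
    return f'{GEOCODE_ENDPOINT}?{query}'


def extract_lat_lng(payload):
    """Return (lat, lng) from a decoded response, or None if nothing matched."""
    if payload.get('status') != 'OK' or not payload.get('results'):
        return None
    location = payload['results'][0]['geometry']['location']
    return location['lat'], location['lng']


def geocode(address, api_key):
    """Send one geocoding request and return (lat, lng) or None."""
    url = build_geocode_url(address, api_key)
    with urllib.request.urlopen(url, timeout=10) as response:
        return extract_lat_lng(json.load(response))
```

A call would look like `geocode('3606 Market Place Blvd., East Point, GA 30344', 'YOUR_API_KEY')`, where the key is a placeholder for your own.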

Scaling up to a full crawler for extracting all Marshalls store locations in USA

Once you have a scraper that can extract data for one zip code or city, you will have to iterate through all the US zip codes.

How many requests this takes depends on how much coverage you want, but for a national chain like Marshalls you are looking at running the above code 100,000 times or more to ensure that no region is left out.
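The iteration itself can be sketched as a loop that calls the single-zip scraper and merges the results. In the sketch below, `fetch_stores` is a hypothetical function you would write by wrapping the Selenium and BeautifulSoup steps above so that it takes a zip code and returns a list of dictionaries, each with a `'url'` key. Because a search for one zip code returns stores in neighboring towns as well, the same store will show up many times, so the sketch keeps only one record per store URL.

```python
def crawl_zip_codes(zip_codes, fetch_stores):
    """Run a single-zip scraper over many zip codes and merge the results.

    fetch_stores is a hypothetical callable: zip code -> list of store dicts,
    where each dict has at least a 'url' key.
    """
    stores_by_url = {}
    failed = []
    for zip_code in zip_codes:
        try:
            stores = fetch_stores(zip_code)
        except Exception:
            # Remember the zip code so it can be retried later.
            failed.append(zip_code)
            continue
        for store in stores:
            # Keep the first record seen for each store URL.
            stores_by_url.setdefault(store['url'], store)
    return list(stores_by_url.values()), failed


# Demonstration with a stand-in scraper instead of real requests.
def fake_fetch(zip_code):
    if zip_code == '00000':
        raise RuntimeError('simulated failure')
    return [{'url': 'store/463', 'name': 'East Point'},
            {'url': 'store/' + zip_code, 'name': 'Store near ' + zip_code}]


stores, failed = crawl_zip_codes(['30301', '30302', '00000'], fake_fetch)
print(len(stores), failed)
# 3 ['00000']
```

Returning the failed zip codes separately makes it easy to rerun only those once the cause of the failure is fixed.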

Once you scale up to thousands of requests, the Marshalls.com servers may block your IP address outright, or flag you and start serving CAPTCHAs.

To improve your chances of successfully fetching data for the whole USA, you will have to:

- rotate proxy IP addresses, preferably using residential proxies

- rotate user agents

- use an external CAPTCHA-solving service like 2captcha or anticaptcha.com
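As a rough sketch of the first two items, the generator below cycles through user agents and proxies and yields the Chrome arguments for each new browser session. `--user-agent` and `--proxy-server` are Chrome command-line switches; the user agent strings and proxy addresses shown are placeholders we made up, and you would substitute real browser user agents and the endpoints from your proxy provider. CAPTCHA solving is not covered here.

```python
import itertools

# Placeholder values: replace with real browser user agent strings
# and the proxy endpoints supplied by your provider.
USER_AGENTS = ['ExampleAgent/1.0', 'ExampleAgent/2.0', 'ExampleAgent/3.0']
PROXIES = ['http://proxy-1.example.com:8000', 'http://proxy-2.example.com:8000']


def rotating_chrome_arguments(user_agents, proxies):
    """Yield an endless sequence of Chrome argument lists, one per session."""
    pairs = zip(itertools.cycle(user_agents), itertools.cycle(proxies))
    for user_agent, proxy in pairs:
        yield [f'--user-agent={user_agent}', f'--proxy-server={proxy}']


rotation = rotating_chrome_arguments(USER_AGENTS, PROXIES)
for _ in range(3):
    print(next(rotation))
```

Each list can be applied to a fresh `ChromeOptions` object by calling `add_argument` once per entry before starting the browser for the next zip code.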

After you follow all the steps above, you will realize that our price ($50) for the scraped Marshalls store locations dataset is one of the most competitive in the market.